Over the past year, I’ve been working with my team on how artificial intelligence can help retailers reduce the cost of returns. This is no small problem. Returns are expensive, complex, and often feel unsolvable. For many retailers, the priority has always been simple facilitation, aka getting an item back from B to A. But what if we could go further? What if AI could help retailers decide if a return is worth processing in the first place, and guide them towards the most cost-effective way to handle it?

The potential was there. Retailers we spoke with were excited. I ran workshops at our internal customer conference to test the vision, and the feedback was consistent: the opportunity resonated. But as we soon learned, having a strong idea is just the start. Building it out is where the real work begins.

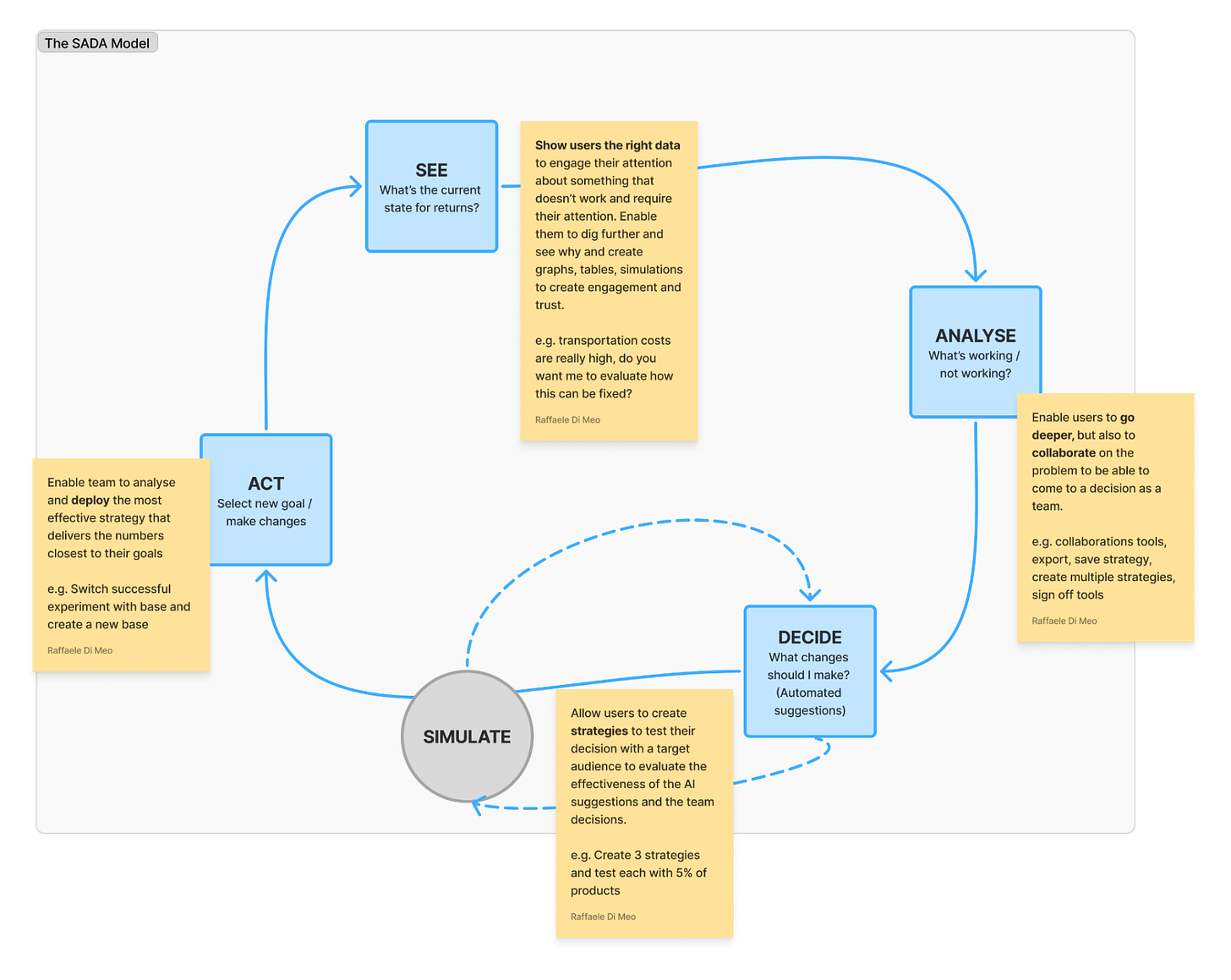

This is where, as a team, we created a model to shape our thinking, the SADA model: See, Analyse, Decide, Act.

Seeing the landscape

Our first step was to look far and wide. We conducted a competitor analysis not only in our immediate space but across various sectors, aiming to understand how others were approaching AI.

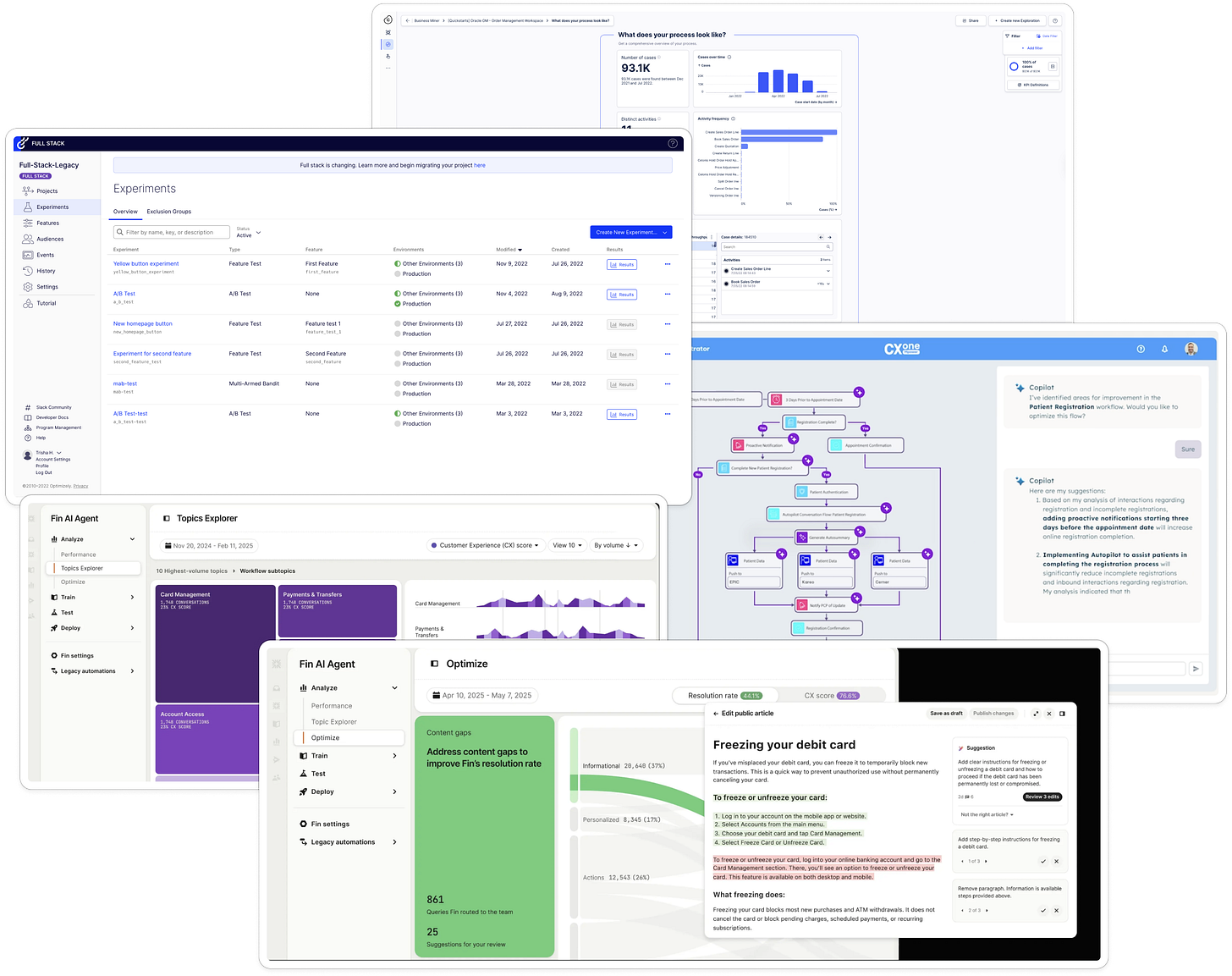

One example that stood out was Intercom’s Fin. Their approach wasn’t just about providing an answer in the moment; it was about training, testing, and deploying in structured stages. That felt far more relevant to the type of complex, high-stakes decisions we were dealing with. In consumer AI, a chatbot can provide instant answers. In B2B contexts, decisions affect supply chains, contracts, and millions of products. Trust, process, and validation matter.

Analysing the mistakes

Our first iteration taught us some hard lessons.

We thought: what if we could simply use AI to summarise insights and display them on a page? Retailers could click in, dig deeper, and decide what needed action. It served as a starting point, helping us understand models, prompts, and the kinds of insights that sparked the strongest reactions from retailers.

It wasn’t enough!

What we had built appealed mostly to superusers. The insights the AI generated highlighted significant, systemic issues that needed complex, cross-functional decisions: for example, whether reselling certain SKUs was worth it, or whether switching carriers could reduce costs. These are not one-click changes; they demand analysis, debate, and organisational sign-off.

We had underestimated the complexity of the problem and overestimated what a simple AI summary could deliver. That was a critical learning point.

Deciding on the right approach

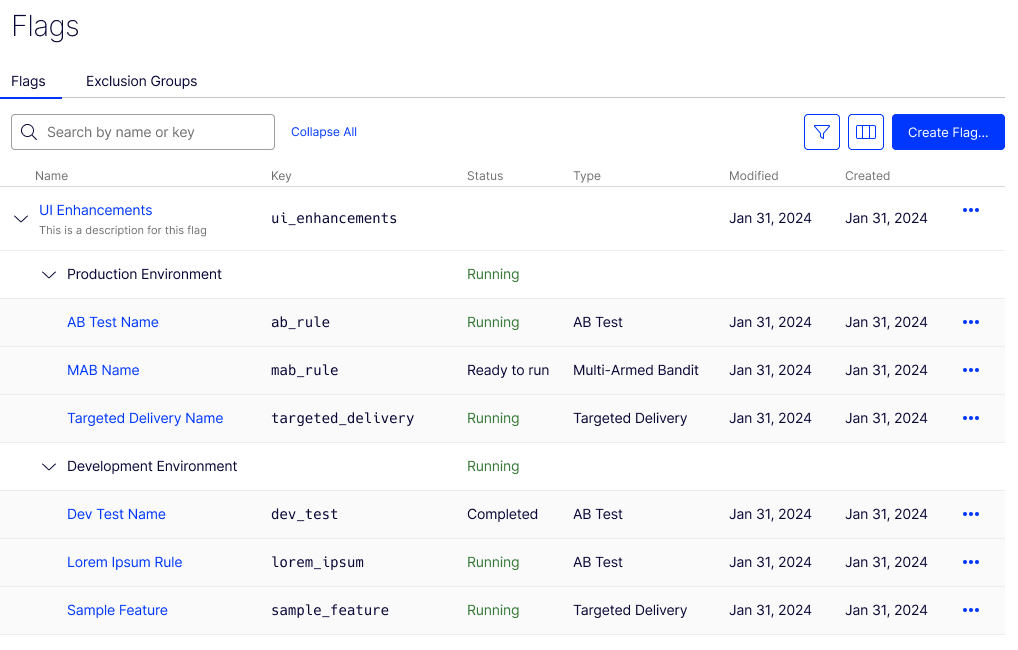

At this stage, we revisited models that had worked in other contexts.

Intercom’s Fin inspired us with its explicit Analyse → Train → Test → Deploy structure. It aligned with the SADA model we had already discussed at Blue Yonder, and gave us a clearer sense of how to create an AI solution that supported retailers step by step, rather than just spitting out answers.

I also drew on past experiences with tools like Optimizely. Their testing framework allows teams to validate changes before committing fully. That felt particularly relevant here. Retailers don’t want to gamble their supply chains on AI recommendations; they want to experiment, measure against baselines, and build confidence.

This was a significant shift. We realised our AI tool couldn’t be about instant answers. It needed to support simulation, experimentation, and trust-building.

Acting with structure

With that in mind, we reframed our solution. Instead of a static dashboard, we’ve been designing a more structured end-to-end tool:

- Set parameters: Retailers define what can and can’t be changed, so AI doesn’t waste time suggesting impossible options.

- Layered insights: Directors can view high-level information, while analysts can go deeper and experiment with numbers before proposing changes.

- AI orchestration: Users can simulate scenarios to test what happens when they switch carriers, change routes, or adjust which SKUs are resold.

- Experiments: Promising scenarios can be trialled on a subset of product lines, measured against baselines, and run over time to validate impact.

This staged approach mirrors the SADA model:

- See → the AI highlights areas of opportunity.

- Analyse → users explore scenarios and test options.

- Decide → the organisation agrees which scenario is viable.

- Act → the decision is implemented and monitored in the real world.
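
To make the first and last steps of that workflow a little more concrete, here is a minimal sketch in Python. It is illustrative only and not how our tool is built: the names (`Parameters`, `Scenario`, `is_allowed`, `uplift`), the carriers, the SKUs, and every number are made up for the example. It shows two ideas from the lists above: filtering out scenarios the retailer has ruled out, and measuring a trial against a baseline.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Parameters:
    """Limits the retailer sets up front (hypothetical structure)."""
    allowed_carriers: frozenset
    locked_skus: frozenset  # SKUs whose resale policy must not change


@dataclass(frozen=True)
class Scenario:
    """A change someone might want to simulate (hypothetical structure)."""
    name: str
    carrier: str
    changed_skus: frozenset  # SKUs whose resale policy this scenario changes


def is_allowed(scenario: Scenario, params: Parameters) -> bool:
    """Set parameters: reject scenarios that break the retailer's limits."""
    uses_allowed_carrier = scenario.carrier in params.allowed_carriers
    touches_locked_sku = bool(scenario.changed_skus & params.locked_skus)
    return uses_allowed_carrier and not touches_locked_sku


def uplift(baseline_costs, trial_costs) -> float:
    """Experiments: fractional saving of the trial group versus the baseline."""
    base = mean(baseline_costs)
    return (base - mean(trial_costs)) / base


params = Parameters(
    allowed_carriers=frozenset({"carrier_a", "carrier_b"}),
    locked_skus=frozenset({"SKU-3"}),
)
candidates = [
    Scenario("switch carrier", "carrier_b", frozenset()),
    Scenario("unapproved carrier", "carrier_c", frozenset()),
    Scenario("resell locked SKU", "carrier_a", frozenset({"SKU-3"})),
]

# Only scenarios inside the retailer's limits are worth simulating.
viable = [s.name for s in candidates if is_allowed(s, params)]

# Made-up cost per return for a trial subset of product lines.
saving = uplift(baseline_costs=[10.0, 12.0, 8.0], trial_costs=[9.0, 10.0, 8.0])

print(viable)
print(f"Saving versus baseline: {saving:.0%}")
```

A real experiment would need far more than a difference in averages, such as enough volume, a fair comparison group, and time to run, but the shape is the same: constrain first, then test against a baseline before anyone commits.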

Building trust in AI

At the heart of this, trust is the deciding factor. Retailers won’t adopt AI tools unless they believe the process is transparent, reliable, and accountable. That means showing not only what the AI suggests, but also why it suggests it, and giving retailers the chance to test and validate before committing.

The SADA model provides a helpful framework for this journey. It ensures that AI doesn’t just deliver insights but becomes a partner in decision-making, guiding teams through structured stages rather than rushing them into high-stakes choices.

Consumer AI is not like workplace AI

The temptation with AI is always to promise speed and instant results. But in the world of returns, where decisions ripple across supply chains and balance sheets, speed without trust is meaningless.

By combining broad exploration (from competitors like Intercom to tools like Optimizely) with the structured discipline of the SADA model, we’re starting to create something that can genuinely help retailers cut costs in a way that feels both ambitious and realistic.

And most importantly: we’re learning to design AI not just for retailers, but with them.

Some fun stuff from the last few months

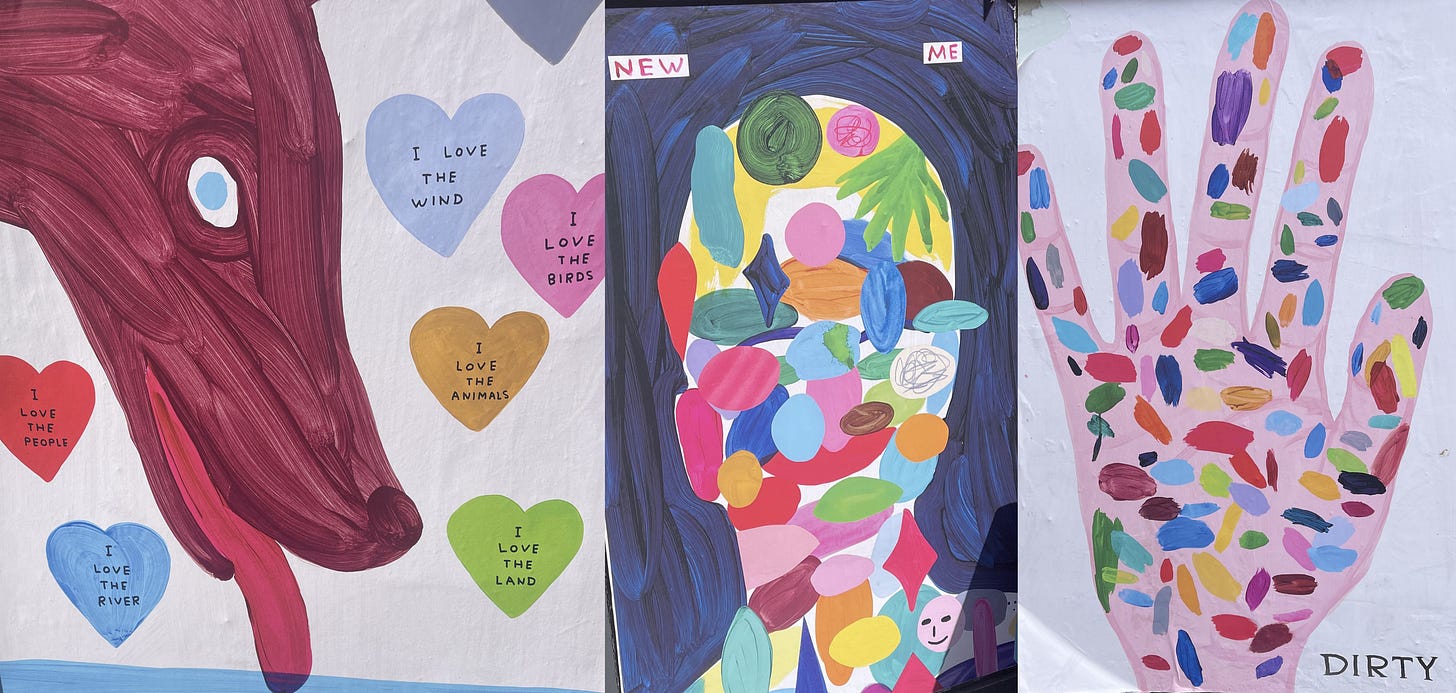

I went walking around the streets of Brighton to find David Shrigley's art dotted around the city!

I did a presentation about the power of research for product success at Eastbourne Digifest. You can watch it here.